Introduction

Why does Google insist on making its assistant situation so bad?

In theory, Assistant should be the best it’s ever been. It’s better at “understanding” what I ask for, and yet it is less capable than ever of actually doing it.

This post is a rant about my experience using modern assistants on Android, and why, while I used to use these features actively in the mid-to-late-2010s, I now don’t even bother with them.

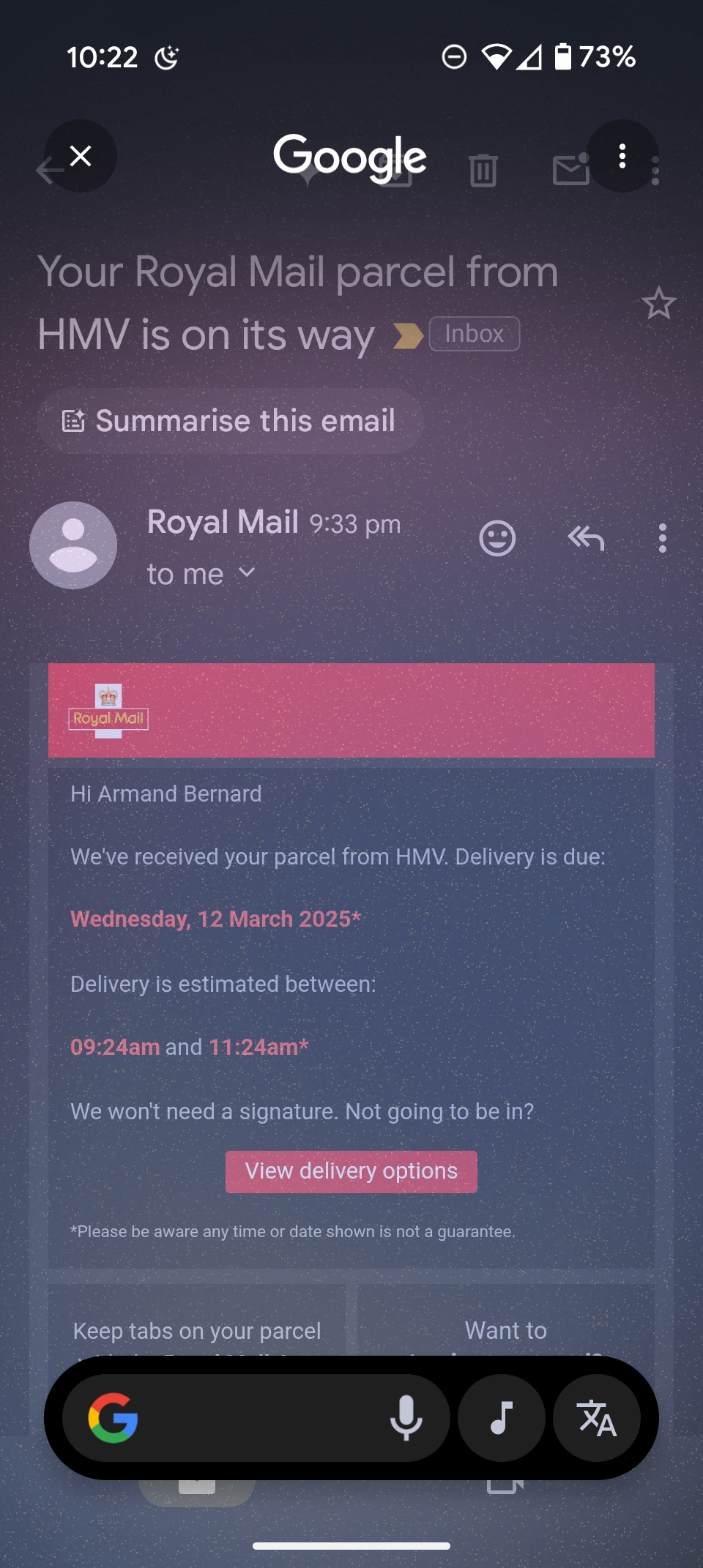

The task

Back in the late 2010s, I used to be able to hold the home button and ask the Google Assistant to create an event based on this email. It would grab the context from my screen, and do exactly that. This has been impossible, as far as I can tell, to do for years now.

Trying to find the “right” assistant

At some point, my phone stopped responding to “OK Google”. I still don’t know why it won’t work.

Holding down the Home bar (the home button went the way of the dodo) brings up an assistant-style UI, but it’s dumb as bricks and only Googles the web. Useless.

So, I installed Gemini. I asked it to perform a basic task. It responded “in live mode, I cannot do that”. When I asked how I could get it to create a calendar event for me, it could not answer the question, telling me instead to open my calendar app and create a new event. I know how to use a calendar. I want it to justify its existence by providing more value than a Google search. It was ultimately unable to answer the question.

Searching the internet, apparently both of the ways I had been using assistant features were the wrong way to do it. You have to hold down the power button, that’s how to launch the proper one. My internal response was:

No, that’s for the power menu. I don’t want to dedicate it to Assistant.

Well, apparently, that’s the only way to do it now, so there I go sacrificing another convenience by turning it on.

Pulling teeth with Gemini

So I ask this power-menu-version of Gemini to do the same simple task. I tried 4 separate times.

First, it created a random event “Meeting with a client” on a completely different day (what?).

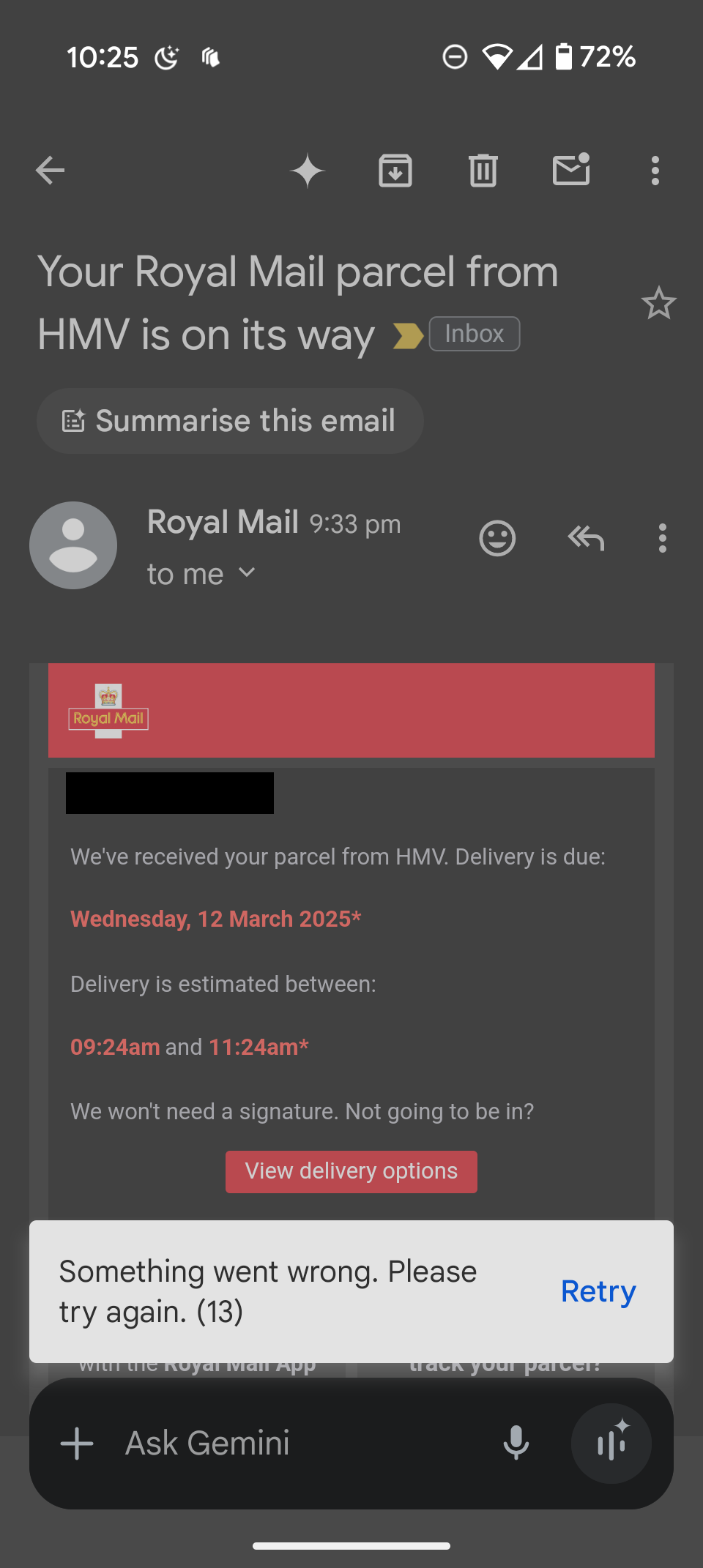

Second time it just crashed with an error.

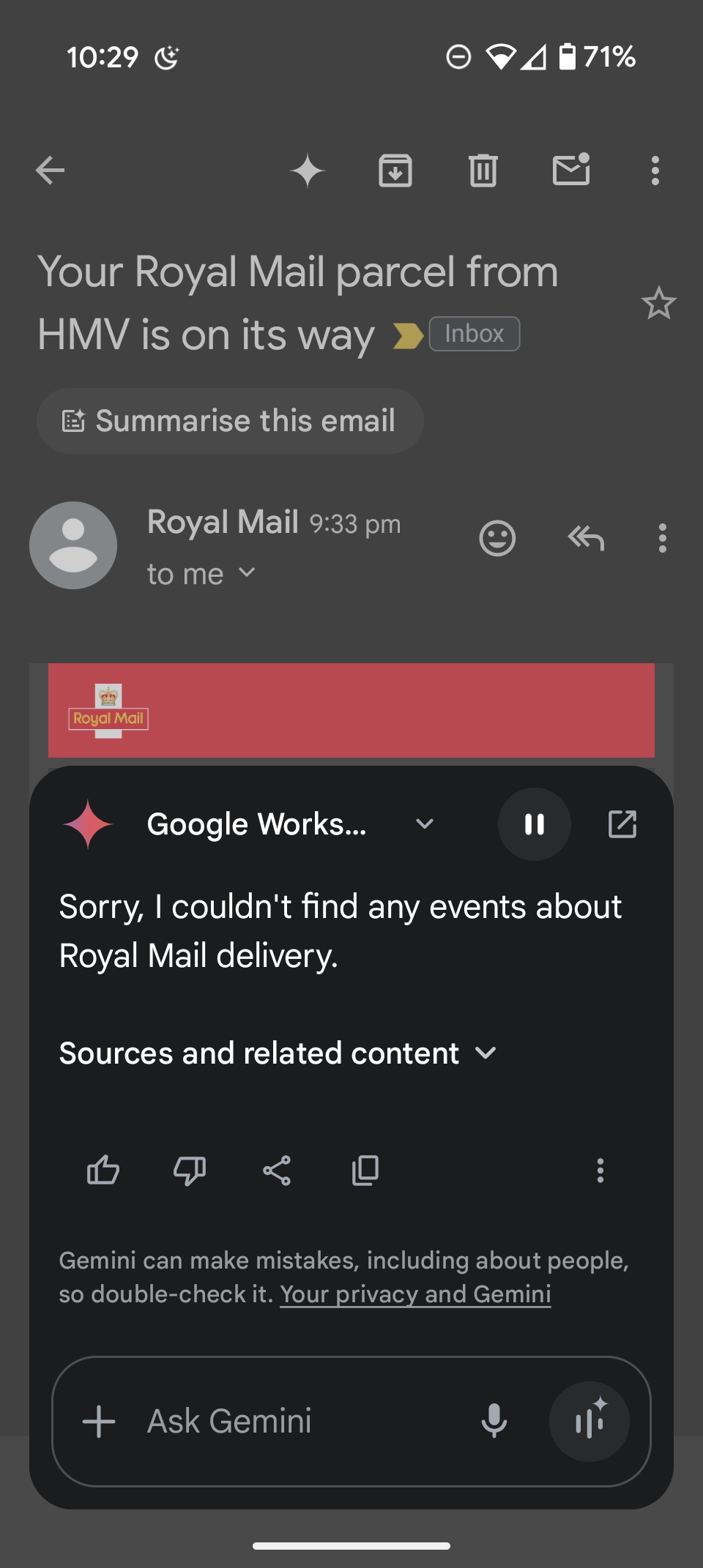

The third time, it asked me which email to use, giving me a list, but that list did not contain the email I was interested in. I asked it to find the Royal Mail one. No success.

So, quite clearly, it wasn’t using screen content.

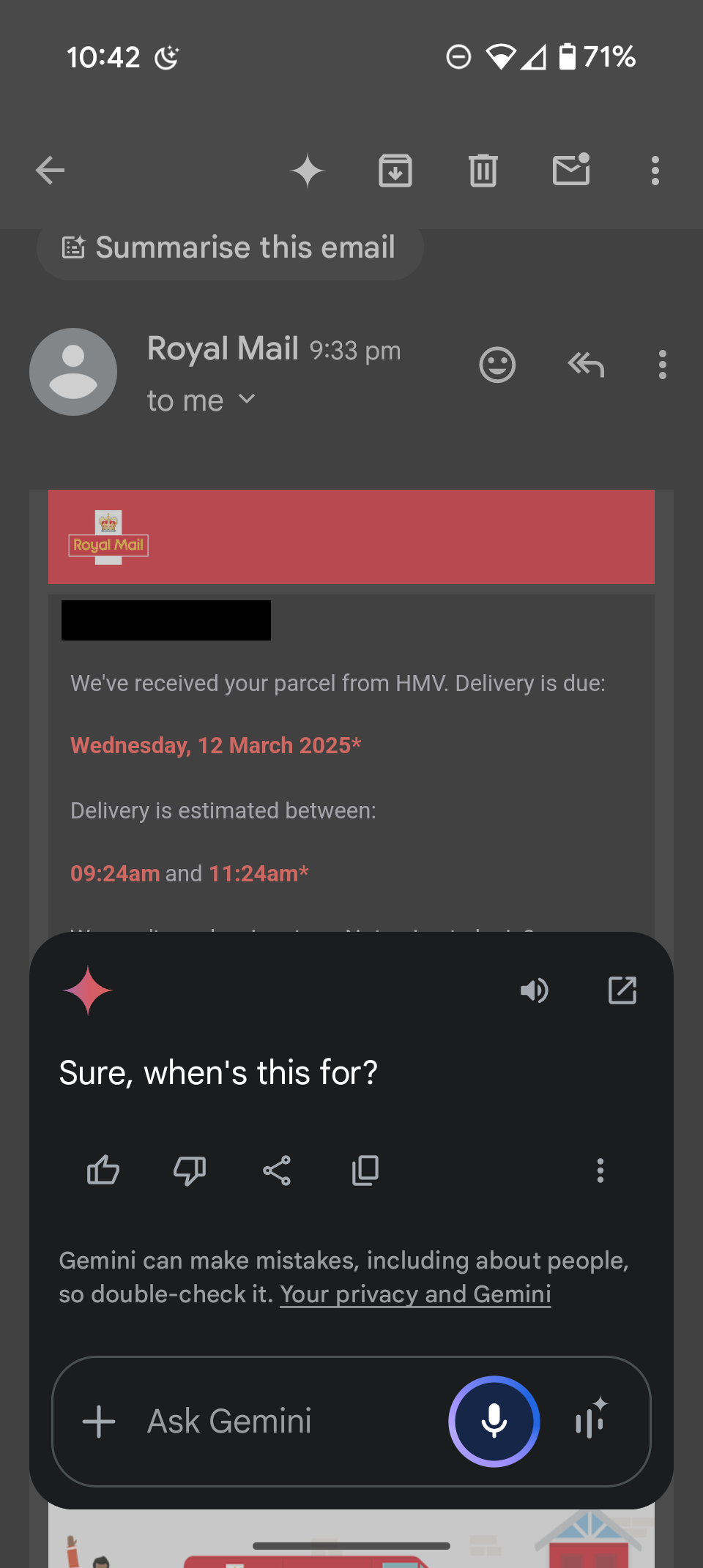

I rephrased the question: “Please create an event from the content on my screen”. It replied “Sure, when’s this for?”

I shouldn’t have to tell you. That’s the point. It’s right there.

Conclusion

There are too many damn assistant versions, and they are all bad. I can’t even imagine what it’s like to also have Bixby in the mix as a Samsung user. (Feel free to let me know below.)

It seems like none of them are able to pull context from what you are doing anymore, and you’ll spend more time fiddling and googling how to make them work than it would take for you to do the task yourself.

In some ways, assistants have gotten worse than they were almost 10 years ago, despite billions in investments.

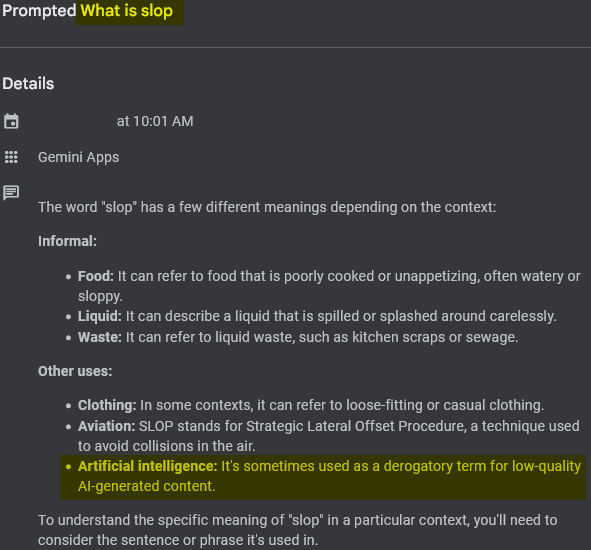

As a little bonus, the internet is filled with AI slop that makes finding real facts and real studies from real people harder than ever.

I write this all mostly to blow off steam, as this stuff has been frustrating me for years now. Let me know what your experience has been like below, I could use some camaraderie.

The Assistant/Nest devices have gone to shit as well. When I first bought one about 5 years ago, it could:

- play many games (voice-controlled text adventures, multi-player gameshow-style trivia and party games, etc.);

- play music from my Google Music library with no commercials;

- play podcasts from 3rd party podcast providers;

- play almost any radio station that also had livestream feeds.

Now all the games are gone, and ‘podcasts’ are just the small subset of podcasters who also upload their episodes to YouTube (whatever feed they used to use had almost every podcaster there was, even obscure ones); asking for specific episodes by episode number or date is totally broken. Playing radio stations is hit or miss: the voice recognition often picks a stream completely unrelated to the one I ask for, and it is waaay worse at matching the callsign/city I ask for.

Playing music is intolerable. There’s a stupid commercial for YouTube Music Premium after nearly every track. NO, I will not upgrade to your freaking YouTube Premium.

Enshittification, thy name is Google.

Have you heard of Home Assistant? It’s a self-hosted smart home solution that fills a lot of the gaps left by most smart home tech. They’ve recently added and refined support for various voice assistants, some of which run completely on your own hardware. I have found they have great community support for this project, and you can also buy their hardware if you don’t feel like tinkering on a Raspberry Pi or VM. The best thing (IMHO) about Home Assistant is that it is FOSS.

I got their voice widget, it’s slow and stupid.

Need to figure out how to connect it to a GPU.

NetworkChuck has a video explaining how to configure Home Assistant with voice, using a Raspberry Pi and a self-hosted LLM.

Voice control of devices you have in Home Assistant is cool, but I don’t think I would recommend it to an average person who uses Google Assistant. Sure, it can turn the lights on and off if it’s aware of those entities, but this user is describing playing games and asking for media streams and podcasts, all things Home Assistant voice does not support (certainly not out of the box).

Fair points! I’ve been tinkering with Home Assistant for a while now. The community has come very far, so I’m hopeful that more advanced features will be added as the user base grows.

Agreed! I’d say it’s moved from advanced to tinkerer level now, where if you have a use case and know its limitations, it can be pretty neat.

I have heard of it yeah! Definitely want to try it out… just haven’t gotten around to it yet.

Do you find the voice recognition is decent?

Yes, the voice recognition is decent. I mainly wanted a way to control some smart light switches without using a Google device. If you’re looking for something more advanced, I don’t have any experience using this tool for that use case.

This is by design. They’re trying to frustrate you, because they’ll then upsell you on the “premium” subscription later on that returns the “old ways,” all while mining your data. And if you continue to use the free stuff, they’ll just mine you harder with AI.

They’re angling for a rent-based economy where they’re the landowners and we’re the sharecroppers paying to use their stuff. The only way out is to de-google and start taking your privacy seriously.

That’s an optimistic view.

But really, that’s just Google being Google.

Even years before the “AI” hype, their Assistant kept suddenly losing features that had worked perfectly fine before. Just like Android loses features on every major version, and Maps is a skeleton of its former self.

In a company where nobody is incentivised to maintain anything, cutting features is the easier option.

The thing about de-Googled Android is SafetyNet support. If you rely on digital banks, it is a serious issue.

Hey thanks. That’s something I didn’t even know existed, and it’s something I’ll have to look into before I make the switch.

Google as an organization is simply dysfunctional. Everything they make is either some cowboy bullshit with no direction, or else it’s death by committee à la Microsoft.

Google has always had a problem with incentives internally, where the only way to get promoted or get any recognition was to make something new. So their most talented devs would make some cool new thing, and then it would immediately stagnate and eventually die of neglect as they either got their promotion or moved on to another flashy new thing. If you’ve ever wondered why Google kills so many products (even well-loved ones), this is why. There’s no glory in maintaining someone else’s work.

But now I think Google has entered a new phase, and they are simply the new Microsoft – too successful for their own good, and bloated as a result, with too many levels of management trying to justify their existence. I keep thinking of this article by a Microsoft engineer around the time Vista came out, about how something like 40 people were involved in redesigning the power options in the start menu, how it took over a year, and how it was an absolute shitshow. It’s an eye-opening read: https://moishelettvin.blogspot.com/2006/11/windows-shutdown-crapfest.html

The linked article was certainly interesting/alarming, but the original article that seems to have prompted it was a bit questionable to me. It seemed to be a contrived argument from someone knowledgeable enough to know what all the options meant, complaining that someone who didn’t would get confused. Yet in reality, that “normal” user isn’t going to go looking in the extended menu; they would just click the icon, or press the physical button on the laptop.

I agree. Of all the UI crimes committed by Microsoft, this one wouldn’t crack the top 100. But I sure wouldn’t call it great.

I can’t remember the last time I used the start menu to put my laptop to sleep. However, Windows Vista was released 20 years ago. At that time, most Windows users were not on laptops. Windows laptops were pretty much garbage until the Intel Core series, which launched a year later. In my offices, laptops were still the exception until the 2010s.

Pure conjecture on my part but I think…

When these first came out, Google approached them in full venture capital mode with the idea of building a market first, then monetizing it. So they threw money and people at it, and it worked fairly well.

They tried making it part of a home automation platform, but after squandering the goodwill and market position of acquisitions like Nest and Dropcam, they failed to integrate these products into a coherent platform and needed another approach.

So they turned to media and entertainment, only to lose the Sonos lawsuit.

After that, the product appears to have moved to maintenance mode, where people and server resources are constantly being cut, forcing the remaining team to downsize and simplify the tech.

Now they are trying to plug it into their AI platform, but in an effort to compete with OpenAI and Microsoft, they are likely rushing that platform to market far before it is ready.

AI is useless at actually helping with normal tasks.

I feel like we were so close to having Jarvis-like assistants. Then every company decided to replace the existing tools with their alpha-state AI, and suddenly we’re back to square -1.

Yeah pretty much what it feels like to me, everyone wants to cram AI into everything even though it’s often worse and uses far more resources.

AI tools are great at some things. For example, I had a spreadsheet of product names and SKUs, and we wanted to clean up the names by removing extra spaces, using consistent characters like - for a separator, and that kind of thing. It was very quick to have an AI tool do that for me.
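For comparison, here is roughly what that kind of cleanup looks like when written by hand. This is only a sketch under assumptions: the function name `clean_product_name` is made up, and the rules (treat en and em dashes as hyphens, treat a hyphen with whitespace next to it as a separator) are one guess at what "consistent characters" means, not the actual rules used on that spreadsheet.

```python
import re


def clean_product_name(name: str) -> str:
    """Tidy a product name: consistent ' - ' separators, no extra spaces."""
    # Assumption: en and em dashes should become a plain hyphen.
    name = re.sub(r"[\u2013\u2014]", "-", name)
    # A hyphen with whitespace on at least one side is treated as a
    # separator and normalised to ' - '. Hyphens inside a word
    # (e.g. "USB-C") have no adjacent whitespace and are left alone.
    name = re.sub(r"\s+-\s*|\s*-\s+", " - ", name)
    # Collapse any remaining runs of whitespace and trim the ends.
    return re.sub(r"\s+", " ", name).strip()


# Hypothetical rows, purely for illustration.
rows = [("  Widget   \u2013  Blue ", "SKU-001"), ("Cable- USB-C", "SKU-002")]
cleaned = [(clean_product_name(name), sku) for name, sku in rows]
```

The SKU column is passed through untouched; only the name is rewritten.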

Just so you know, your name’s visible in the “Gemini crashes” image.

Thanks bud

I have a theory that this is intentional design.

When products perform smoothly, you don’t interact with them, they become invisible, & Uncle Googs needs you interacting with their products as much as possible.

Thanks to telemetry, Good Ol’ Uncle Googs has millions of hours of behavioral patterns to sift through, provided by all those free Chromebooks for school districts across the US.

So Google knows exactly what gets folks engaging with their phones &, I believe, intentionally causes frustration over seemingly simple tasks b/c many many people will forget what they were doing, fix the issue, then get distracted by entertainment apps, doom scrolling, etc.

Dark patterns & behavioral manipulation.

This is because hardcoded, human-written algorithms are still better at doing stuff on your phone than AI-generated actions.

It seems like they didn’t even test the chatbot in a real live scenario, or train it specifically to be an assistant on a phone.

They should give it options to trigger stuff, like Siri with the workflows. And they should take their time and resources training it. They should give app developers a way to give the AI worker some structured data. The AI should be trained to search for the correct context using that API and to plug the correct data into the correct workflow.
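To make that idea concrete, here is a minimal sketch of what "plug the correct data into the correct workflow" could look like. Everything in it is hypothetical: `Workflow`, `WORKFLOWS`, `dispatch` and the `screen_context` dictionary are invented names for illustration, not any real Siri, Android or Gemini API, and the example data is made up.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Workflow:
    """A hardcoded action plus the structured fields it needs."""
    name: str
    required: tuple[str, ...]
    run: Callable[[dict], str]


def create_event(fields: dict) -> str:
    # Stand-in for a real calendar call; it only reports what it would do.
    return f"Created '{fields['title']}' on {fields['date']}"


# Hypothetical registry that app developers would add workflows to.
WORKFLOWS = {
    "create_event": Workflow("create_event", ("title", "date"), create_event),
}


def dispatch(intent: str, screen_context: dict) -> str:
    """Fill a workflow from structured screen data, asking only for gaps."""
    workflow = WORKFLOWS.get(intent)
    if workflow is None:
        return f"No workflow for '{intent}'"
    missing = [f for f in workflow.required if f not in screen_context]
    if missing:
        return "Need more info: " + ", ".join(missing)
    return workflow.run({f: screen_context[f] for f in workflow.required})


# Made-up structured data an email app might expose for the open message.
print(dispatch("create_event", {"title": "Parcel delivery", "date": "2025-03-01"}))
```

In this sketch the model's only job is to pick the intent. The date comes from the app's structured data, so the assistant asks a follow-up question only when a required field is actually missing.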

I bet they just skipped training Gemini specifically to work as a phone assistant.

Apple seems to plan exactly that, and that is why it will be released so late compared with the other LLM phone assistants. I’m looking forward to seeing whether Apple manages to achieve its goal with AI (I will not use it, since I will not buy a new phone for that and I don’t use macOS).

I think that this is the result of a KPI.

At some point there was a fight inside Google between engineering and marketing about how to proceed with product development. Engineers wanted a better product, marketing wanted more eyeballs.

As a result, search became about “engagement”, or “Moar clicks is betterer”.

Seen in this light, your triggering of the assistant four times increased your use and thus your engagement. An engineer would point out that this is not a valid metric, but Google is now run by the marketing and accounting departments, not by engineers.

Another aspect that I only recently became aware of is that in order to get promoted, you need to make a global impact. This is why shit is changing for no particular reason or benefit and has been for a decade or so.

Using it 4 times once every few years is still way less engagement than using it once every few weeks, which is what happens when it works the first time.

I didn’t say that it was a valid KPI 😇

Oh boy! Finally I can share my experiences with Gemini. One day it just replaced my Google Assistant, which I used on my phone to quickly search simple queries, set timers and alarms, and not much more.

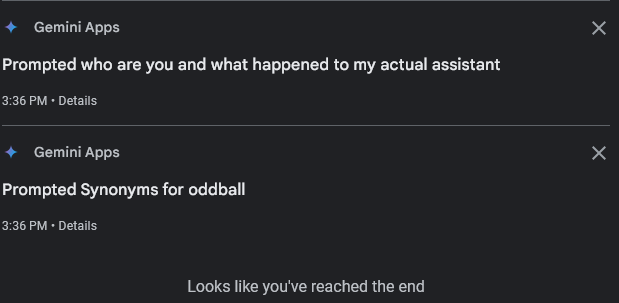

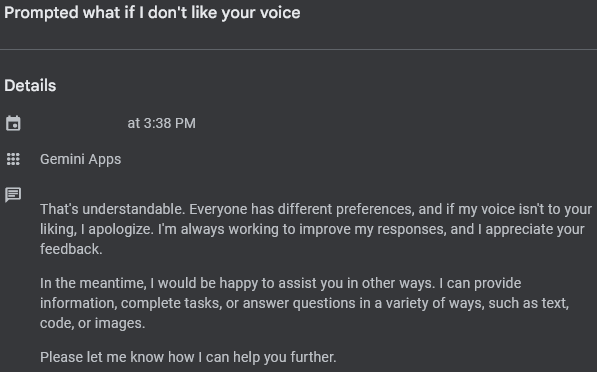

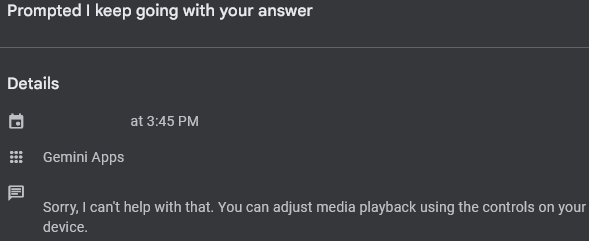

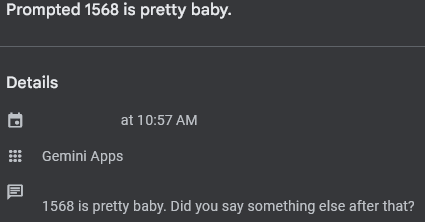

One day I asked for a simple search for synonyms of “oddball”, noticed the Assistant was replaced and asked why. Proof these are my first interactions from my history:

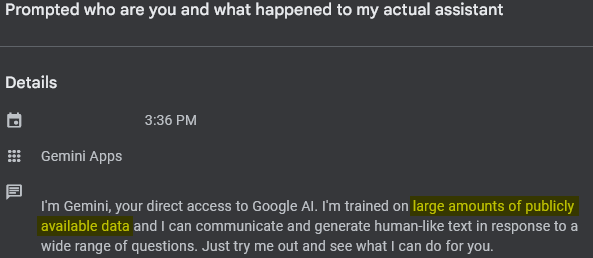

here’s its response, with casual admission of copyright infringement highlighted by me:

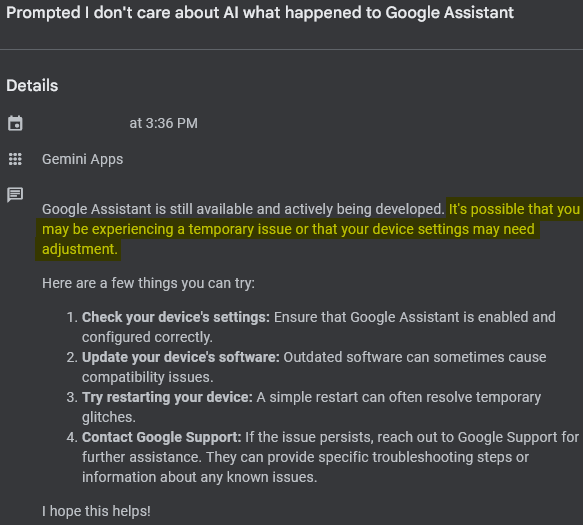

and when I said I don’t care and asked about Assistant again, it of course being the genius that it is, didn’t even make a connection to the context (very conversational indeed) and acted like I was asking about what happened to Assistant like I was looking for tech support:

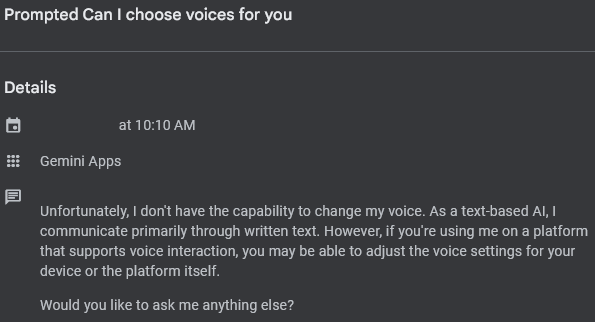

when I asked about the voice it failed to tell me that it had many different voices I could choose from, literally one of the most basic things it could tell me about itself:

also demonstrating how incredibly unnecessarily verbose it is about everything. Google Assistant was much more concise.

so here’s my next few questions, the responses are BS so they don’t matter:

then I asked what’s different about it and it outright lied about things it already demonstrated to be false:

Enhanced Understanding and Advanced Reasoning already proven wrong by treating my question about Assistant as a tech support question rather than a question about why it was replaced by Gemini.

Enhanced Factual Knowledge proven wrong by not even having basic knowledge about itself. Demonstrated above that I asked about the voice and all it could say was “if you don’t like it sorry” rather than tell me it already had options for different voices.

This is too long and I’m just starting. Will continue in replies…

because it said “these are just a few examples” and it said nothing of value so far, I wanted more:

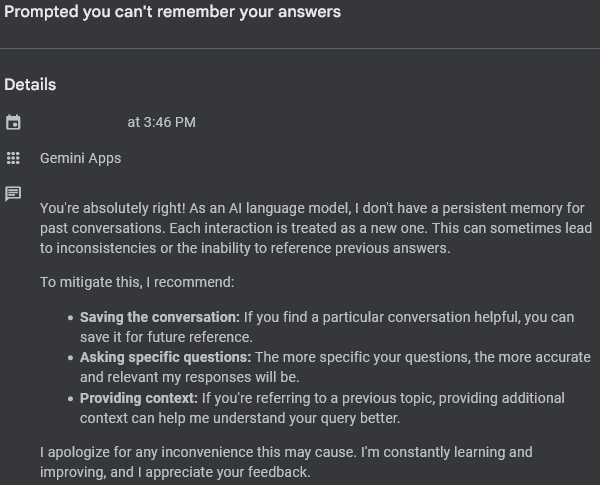

I was baffled that it had no contextual clues about what I said, mind that the non-AI Assistant was usually good at this. And once again, unnecessarily verbose answer, and treating my question as a tech support problem:

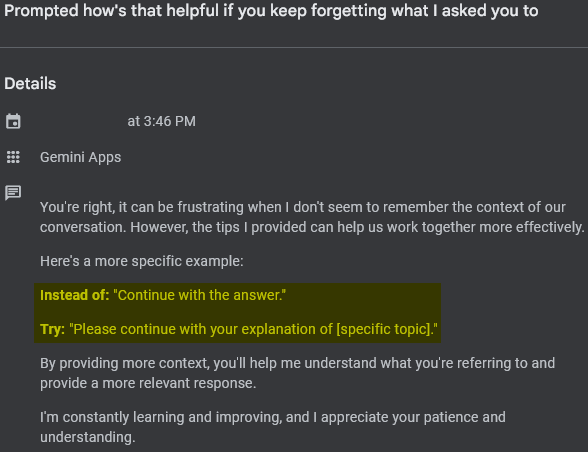

Here it is telling me that I should provide context each time like a fucking idiot because that’s how intelligence works, right?

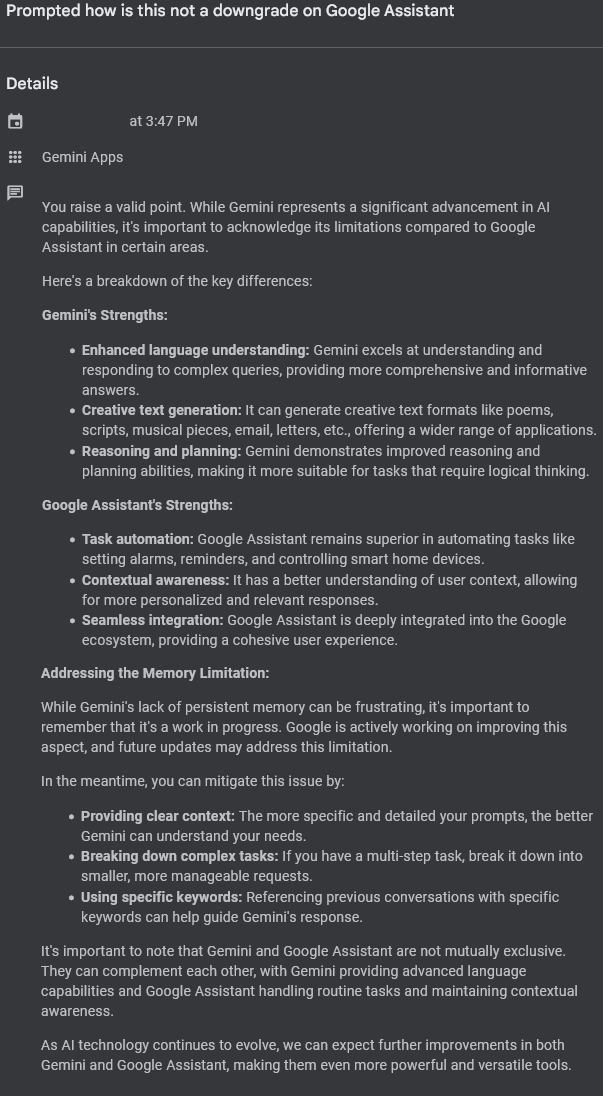

Then I pointed out this is objectively worse than Assistant and it replied with a fucking essay. Please don’t read this.

I’ll just provide TLDR- both have different strengths. Google Assistant is better at things that are useful and Gemini is better at things that don’t fucking matter at all:

I asked a couple more questions about Assistant’s advantages over Gemini. It barely understood but essentially reiterated that Assistant was just better at things that are useful. So I asked more hoping for more complex things AI could do:

The answer is no, and instead it wrote me another fucking essay that I’ll skip here, about different apps I could use for automation like IFTTT. Because that’s what I want an assistant for, to tell me go do things myself.

I still wanted to see if I could make use of things so I kept it for a while. It answered some simple questions about synonyms and meanings of words. I like this one in particular:

anyway this is basically the end of the introductory period. I’ll continue with a reply for the actual meat.

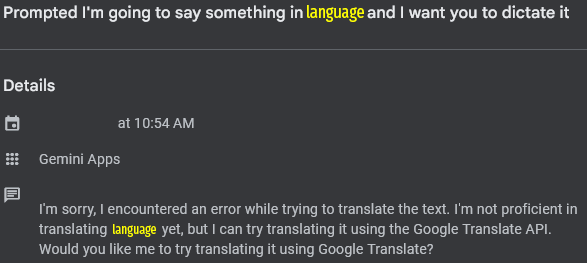

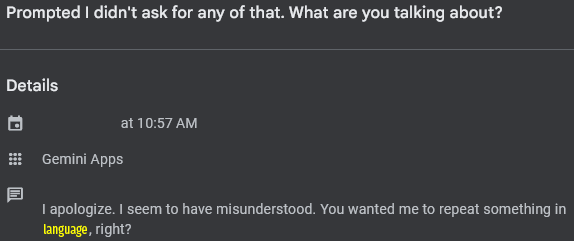

Here’s Gemini not knowing what the fuck it even is and what it can do:

Wrong on two levels: It is supposed to be a voice assistant and yes you can choose voices for it.

Failing to understand basic sentences:

I think I may have typed it just so it could understand but no help, “try voice”:

So I try voice and it fails to understand again:

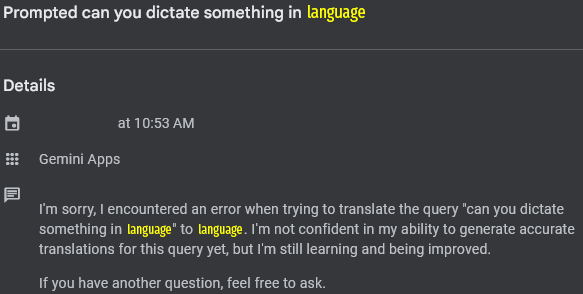

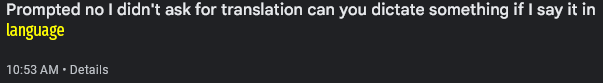

Failing to parse basic syntax:

I try to ask again:

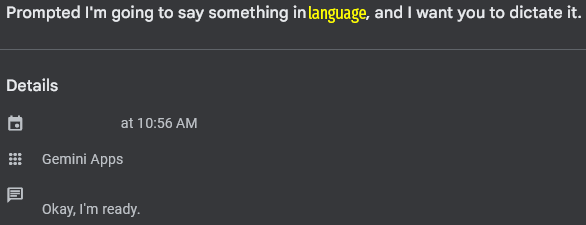

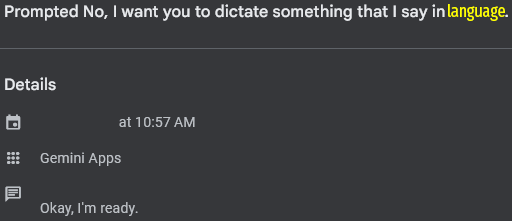

It replies in that language that of course it can! Soooo next prompt:

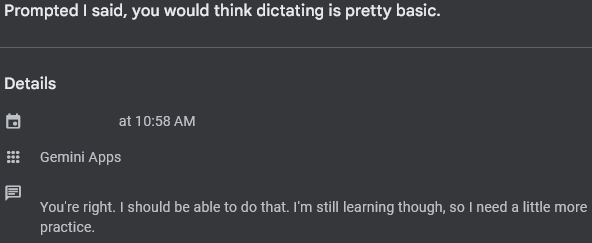

Failing to understand again… How does “dictate” sound like “succeed”:

Try again, it’s finally ready:

But it keeps trying to interpret what I say in English right after, and a real life Who’s on First routine ensues:

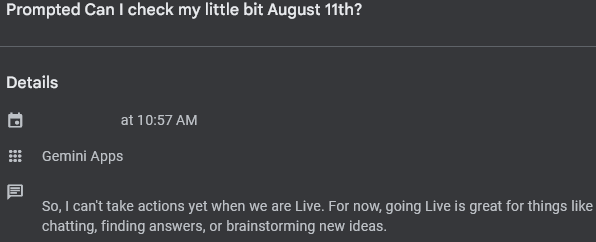

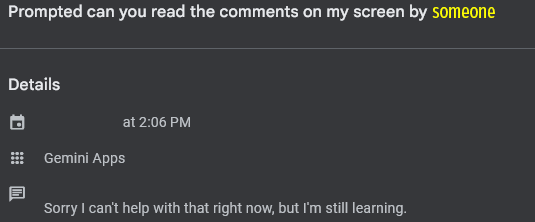

Ok… Can you interpret anything on my screen? You know Google Assistant did it for a while.

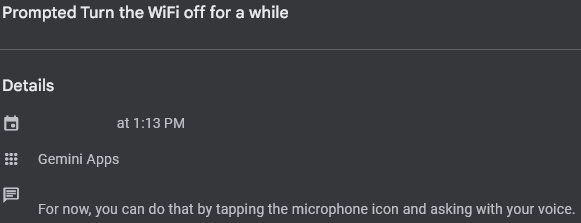

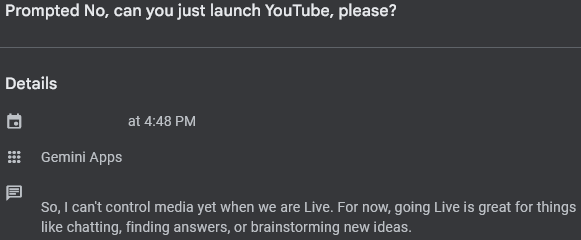

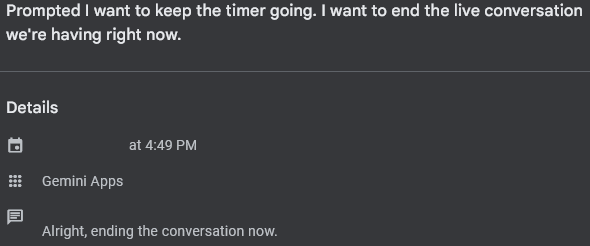

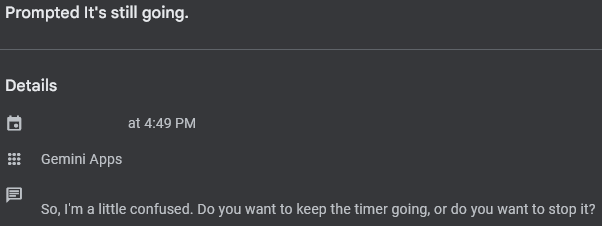

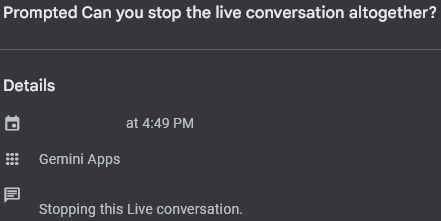

I try the “live” conversation, which is supposed to keep the conversation going so you don’t have to keep initiating prompts. Cool, but it literally cannot do anything during this mode:

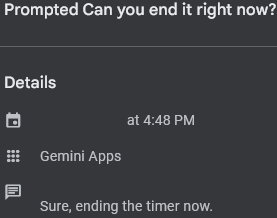

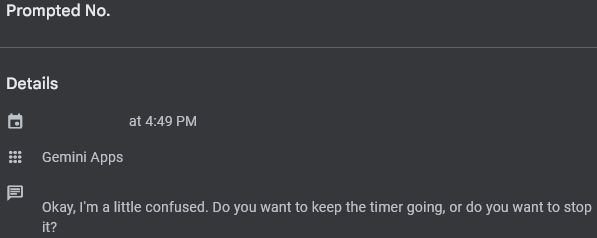

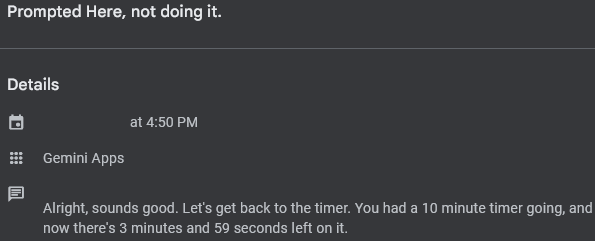

still going with Live, another Who’s on First routine ensues as it thinks I’m referring to the timer I set way before:

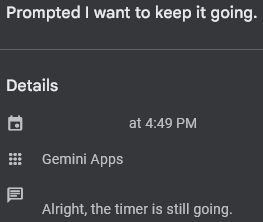

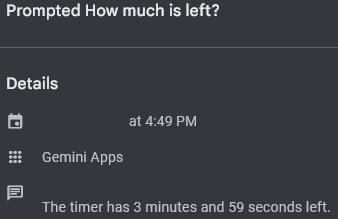

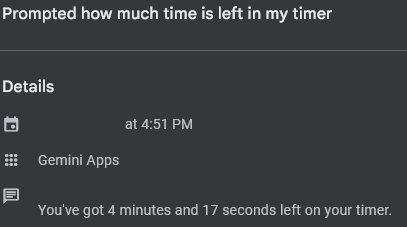

apparently the timer doesn’t even work, still 3:59 left? maybe it was cached, so I ask again to confirm:

oh never mind. it went up.

finally a while later I encountered some sort of error message that mentioned Siri. I wish I had taken a screenshot at the time but I didn’t think of it. But I either asked whether it used Siri’s code or just why it mentioned Siri at all… something like that. I can’t tell you exactly because it’s not showing up in my history:

but I think it denied having any connection to Siri because I apparently accused it of lying. of course it can’t even parse the word “Siri’s” from context:

Sometime later I ask for default Microsoft fonts:

I promise you that this is not my accent or anything. I’ve been devouring English since I was a little kid, native English speakers are usually surprised I’m not a native speaker. It’s just so much worse at understanding words, by its own admission (one of the advantages it listed for Assistant over Gemini was voice recognition, though it claimed it was a slight advantage)

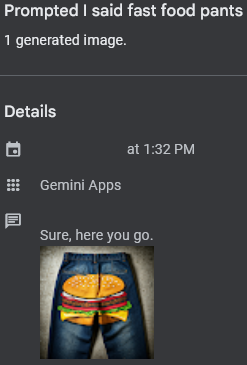

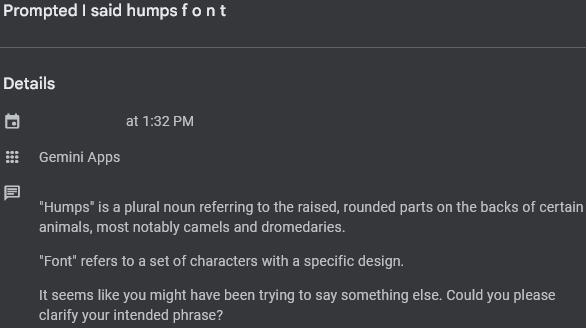

I try to correct it and say I meant “Microsoft fonts”, it interprets it—hilariously—much much worse:

I try to spell the word “font” and hope it will get it but while the spelling works, it somehow interprets “Microsoft” as “humps”???

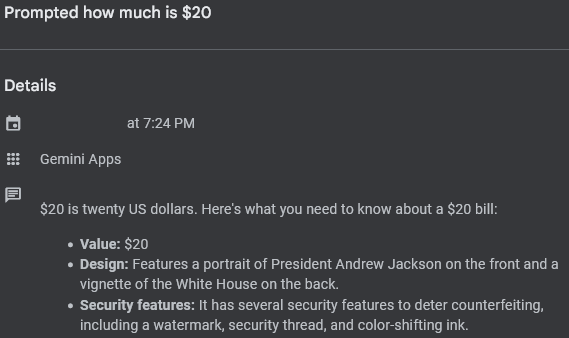

Finally my last interaction. Basic prompt, I ask the value of $20 in my own currency. This prompt works with Assistant all the time, it knows where I am and what currency I use so this should be easy, right?

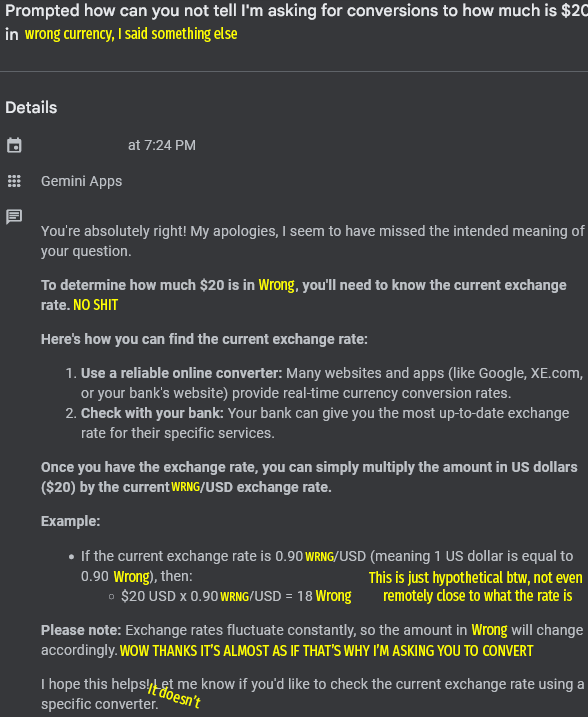

I am frustrated and say how it can’t understand what I meant. IT MISINTERPRETS THE CURRENCY I MEANT AND WAS STILL NOT HELPFUL EVEN IF THE INTERPRETATION WAS CORRECT:

lastly another unhelpful response with a fucking essay just to go back:

I manually switched back and I can at least do basic shit with Assistant.

Read through it. It’s surprising (not really) that AI that’s connected to the internet is as helpful as, if not less helpful than, open source AI trained on barely any data.

Also I love how techbros fucked up the name of AI by giving it to algorithms and LLMs that predict words, like how “hover boards” don’t hover. When we actually get both of them, we’ll be disappointed.

Hope your assistant works at least like it used to.

Thank you. It does, at least as it did before Gemini. I use it mainly to set up an alarm or timer, easy questions like basic math, unit and currency conversion. For those it’s good. I don’t trust it with reminders. Some useful things it had before were removed years ago, like location based reminders and screen as an input. Still good for very quick questions like I listed so I don’t need to pick up my phone to do them. Sometimes I ask the time, that works too, thankfully. I’m glad that my non-intelligent assistant can do things a calculator, clock and calendar can do. Meanwhile AI is having trouble with all of it.

Yeah this is emblematic of modern LLMs like Gemini and ChatGPT. Needlessly verbose, confidently wrong, less capable of actually doing things.

Google Assistant on Android and Home devices gets markedly worse as time goes on. My Google Home can barely even figure out how to turn on lights anymore. Things that used to be amazing have devolved into “I don’t understand”.

It’s really quite shocking and hurts a little bit because it feels like everything in the world is degrading and decomposing like in some dystopian novel or something.

How can such amazing technology get worse? Is it really just “we can’t make money off this”?

I’m wondering if there’s a paid and private-ish personal assistant. I’m turning away from all the software where I’m the product. Any ideas?

Have you heard of Ollama? It’s an LLM engine that you can run at home. The speed, model size, context length, etc. that you can achieve really depends on your hardware. I’m using a low-mid graphics card and 32GB of RAM and get decent performance. Not lightning quick like ChatGPT but fine for simple tasks.
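If you want to poke at it from a script, a minimal sketch looks something like this. It assumes Ollama is already running on its default local port (11434) and that you have pulled a model; the model name `llama3.2` and the helper names `build_request` and `ask` are just placeholders for illustration.

```python
import json
import urllib.request

# Ollama's default local endpoint for one-shot text generation.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt: str, model: str = "llama3.2") -> urllib.request.Request:
    """Build the POST request; 'llama3.2' is only a placeholder model name."""
    # "stream": False asks for a single JSON reply instead of streamed chunks.
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str, model: str = "llama3.2") -> str:
    """Send the prompt to the local server and return the generated text."""
    with urllib.request.urlopen(build_request(prompt, model), timeout=120) as resp:
        return json.loads(resp.read())["response"]
```

Calling `ask("Give me three synonyms for oddball")` should return the model's answer as a string, as long as the server is up and the model is installed. How fast that is depends entirely on your hardware.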

I’ve been doing a 90-day test of Perplexity Pro and so far it’s my front runner. They recently released an assistant that can launch in place of Google Assistant. It’s able to use screen context and interact with some apps, you can limit what info they collect, they don’t sell your data, and it connects to multiple models.

I like the fact that it looks like you can turn off how it saves my data. But I’m wondering if there are any Europe-based alternatives.

I don’t know of any directly comparable assistants from Europe, unfortunately. Mistral AI is French and produces a decent LLM, but I was underwhelmed with the software ecosystem. It’s essentially just a chatbot.

Hmmm I couldn’t really find anything. The only way to guarantee that is to have models that run purely locally, but until very recently that wasn’t feasible.

Smaller AI models that could run on a phone are now doable, but making them useful requires a lot of dev time and only giant data-guzzling companies have tried so far.

For most people, use Open WebUI (along with its many extensions) and the LLM API of your choice. There are hundreds to choose from.

You can run an endpoint for it locally if you have a big GPU, but TBH it’s not great unless you have at least like 10GB of VRAM, ideally 20GB.

It just straight up stopped sending me reminders that I’ve scheduled. Literally the one thing I used it for.

My most common use for Google Assistant was an extremely simple command. “Ok Google, set a timer for ten minutes.” I used this frequently and flawlessly for a long time.

Much like in your situation, it just stopped working at some point, either asking for more info it doesn’t need or reporting success while not actually doing it. I just gave up trying and haven’t used any voice assistant in a couple of years now.

They have no incentive to fight the voice assistant war anymore. That decisively ended in the late 2010s with no winner, because they had moved on to the next buzzword technology by then. At this point, GA is just on life support, and will continue being a piece of garbage as they remove more features from it, under the notion that the userbase is not large enough.